The DBA improvements in this release is much more interesting and can push seriously the adoption in the enterprises of this DBMS.

Database administration is weird, nobody can tell what does looks like. You have to find out by yourself.

Saturday, 20 December 2014

Brand new elephant

So finally PostgreSQL 9.4 has been released. This version introduces a new schema less type, the jsonb which joins the other fantastic features abused and misused by the developers all around the globe.

The DBA improvements in this release is much more interesting and can push seriously the adoption in the enterprises of this DBMS.

The DBA improvements in this release is much more interesting and can push seriously the adoption in the enterprises of this DBMS.

Thursday, 23 October 2014

PostgreSQL, the big, the fast and the ugly

Because an industrial action is taking place in Italy my flight was cancelled.

I'm not going to the Linux Day in person but, thanks Google, I should be able to talk.

I'll start Saturday 15.00 CEST.

I'm not going to the Linux Day in person but, thanks Google, I should be able to talk.

I'll start Saturday 15.00 CEST.

Sunday, 5 October 2014

PostgreSQL and Big Data Talk

After a couple of month dealing with MySQL and wondering how is possible to rely on such fragile system, I'm back to PostgreSQL.

I had the fantastic news my PostgreSQL and big data talk was accepted at the Italian Linux Day 2014 organised by the Ferrara Linux User Group.

The talk will be obviously in Italian and will be very likely available in streaming.

The talk schedule is very interesting indeed and covers the entire day. If you are in Ferrara the 25th of October please pop in the Faculty of Engineering.

See you in Ferrara then.

I had the fantastic news my PostgreSQL and big data talk was accepted at the Italian Linux Day 2014 organised by the Ferrara Linux User Group.

The talk will be obviously in Italian and will be very likely available in streaming.

The talk schedule is very interesting indeed and covers the entire day. If you are in Ferrara the 25th of October please pop in the Faculty of Engineering.

See you in Ferrara then.

Tuesday, 16 September 2014

Book done!

The book is now complete. There's still a lot to do for reviewing the writing and fixing the bad grammar. Anyway is about 107 pages and I'm pretty satisfied. I've worked on this document for 4 months in my spare time and I became more confident with my English during the writing.

So, what's next? I'll spend the next couple of weeks reviewing and fixing the book. After that I'll put the pdf on lulu.com free for download and in hardcopy. The latter will have a production/shipping cost of course. I'll also put the mobi version on amazon for kindle distribution. Unfortunately amazon doesn't allow free books. I'll put the minimum price possible 0.99$. Anyway if anybody wants the mobi for free just give me a shout.

I've already started the second volume. This one will cover the PostgreSQL's advanced topics like the memory manager and the HA.

And now there are the final two sections. The restore performance and the dba advice.

The other face of the coin is the post-data section. Because the objects in this section are mostly random access operations, the completion can require more time than the data section itself; even if the size of the resulting objects is smaller than the table's data. This happens because the unavoidable sort operations are CPU and memory bound. The parallel restore gives some advantage, but as seen in 10.2 each loop's process is single threaded.

Setting up an emergency postgresql.conf file can speed up the restore, reducing the time up to 40% than the production's configuration. What it follows assumes the production's database is lost and the data is restored from a custom format's backup.

Despite the strange reputation, a database expert is an incredible resource for building up efficient and scalable designs. Nowadays is very simple to set up a PostgreSQL instance. Even with the default configuration parameter the system is so efficient that basically doesn't show any problem when running at low or medium load. If this, at first sight, seems a fantastic feature actually is a really bad thing. Any mistake at design level is hidden and when the problem sooner or later appears is too late.

This final advice is probably the most important of the entire chapter. If you have a DBA don't be shy. Ask for any suggestion, even if the solution seems obvious or if the task is small. The database layer is a completely different universe. A small mistake can result in a very big problem.

Of course if there's no DBA, that's bad. Never sail without a compass. Never start a database project without an expert. A professional to take care of the most important part, the foundations.

If this is the case, ask your employer to hire a PostgreSQL DBA as soon as possible.

So, what's next? I'll spend the next couple of weeks reviewing and fixing the book. After that I'll put the pdf on lulu.com free for download and in hardcopy. The latter will have a production/shipping cost of course. I'll also put the mobi version on amazon for kindle distribution. Unfortunately amazon doesn't allow free books. I'll put the minimum price possible 0.99$. Anyway if anybody wants the mobi for free just give me a shout.

I've already started the second volume. This one will cover the PostgreSQL's advanced topics like the memory manager and the HA.

And now there are the final two sections. The restore performance and the dba advice.

Restore performances

When restoring a database, in particular in a disaster recovery scenario, the main goal is to have the data back on line as fast as possible. Usually the data section's restore is fast. If the dump has been taken using the copy statements, which are enabled by default, the reload requires a fraction of the entire restore's time. Taking the advantage of the parallel jobs, available for the custom and directory format, it's still possible to improve the data section's reload.The other face of the coin is the post-data section. Because the objects in this section are mostly random access operations, the completion can require more time than the data section itself; even if the size of the resulting objects is smaller than the table's data. This happens because the unavoidable sort operations are CPU and memory bound. The parallel restore gives some advantage, but as seen in 10.2 each loop's process is single threaded.

Setting up an emergency postgresql.conf file can speed up the restore, reducing the time up to 40% than the production's configuration. What it follows assumes the production's database is lost and the data is restored from a custom format's backup.

shared_buffers

When reloading the data from the dump, the database performs a so called bulk load operation. The PostgreSQL's memory manager have a subroutine which protects the shared segment from the block eviction caused by IO intensive operations. It's then very likely the ring buffer strategy will be triggered by the restore, sticking the IO in a small 4 MB buffer protecting the rest of the memory. A big shared buffer it can cache the data pages when in production but becomes useless when restoring. A smaller shared buffer, enough to hold the IO from the restore processes will result in more memory available for the backends when processing the post-data section. There's no fixed rule for the sizing. A gross approximation could be 10 MB for each parallel job with a minimum cap of 512 MB.wal_level

The wal_level parameter sets the level of redo informations stored in the WAL segments. By default is set to minimal, enabling the xlog skip. Having the database in with a standby, or simply using the point in time recovery as alternate backup strategy requires the parameter to be set to archive or hot_standby. If this is the case and you have a PITR or standby to failover, stop reading this book and act immediately. Restoring from a physical backup is several time faster than a logical restore. If you have lost the standby or PITR snapshot then before starting the reload the wal_level must be set to minimal.fsync

Turning off fsync can improve massively the restore's speed. Having this parameter turned off is not safe, unless the cache is have the backup battery to prevent data loss in case of power failure. However, even without the battery at restore time having the fsync off is not critical. After all the database is lost, what else can happen?checkpoint_segments, checkpoint_timeout

The checkpoint is a vital event in the database activity. When occurs all the pages not yet written to the data files are synced to disk. This in the restore context is a disturbance. Increasing the checkpoint segments and the timeout to the maximum allowed values will avoid any extra IO. In any case the dirtied blocks will be written on disk when the buffer manager will need to free some space.autovacuum

There's no point in having vacuumed the tables after a complete reload. Unfortunately autovacuum does not know if a table is being restored. When the limit for the updated tuples is recognised the daemon starts a new process wasting precious CPU cycles. Turning off temporarily the setting will let the backends to stay focused on the main goal. The data restore.max_connections

Limiting the max connections to number of restore jobs is a good idea. It's ok also giving a slight headroom for one or two connections, just in case there's need to log in and check the database status. This way the available memory can be shared efficiently between the backends.maintenance_work_memory

This parameter affects the index builds which are stored in the restore's post-data section. Low values will results in the backends sorting on disk and slowing down the entire process. Higher values will keep the index build in memory with great speed gain. The value should be carefully sized keeping in mind the memory available on the system. This value should be reduced by a 20% if the total ram is up to 10 GB and by 10% if bigger. This reduction is needed to consider the memory consumed by the operating system and the other processes. From the remaining ram must be subtracted the shared_buffer's memory. The remaining value must be divided by the expected backends to perform the restore. For example if we have a system with 26GB a shared_buffer of 2 GB and 10 parallel jobs to execute the restore, the maintenance_work_mem is 2.14 GB.26 - 10% = 23.4 23.4 - 2 = 21.4 21.4 / 10 = 2.14Ignoring this recommendation can trigger the swap usage resulting in a slower restore process.

Get DBA advice

The database administration is weird. It's very difficult to explain what a DBA does. It's a job where the statement ``failure is not an option" is the rule number zero. A DBA usually works in antisocial hours, with a very limited time window. A DBA holds a lot on the shoulders and that's the reason why those people can seem hostile or living in their own world.Despite the strange reputation, a database expert is an incredible resource for building up efficient and scalable designs. Nowadays is very simple to set up a PostgreSQL instance. Even with the default configuration parameter the system is so efficient that basically doesn't show any problem when running at low or medium load. If this, at first sight, seems a fantastic feature actually is a really bad thing. Any mistake at design level is hidden and when the problem sooner or later appears is too late.

This final advice is probably the most important of the entire chapter. If you have a DBA don't be shy. Ask for any suggestion, even if the solution seems obvious or if the task is small. The database layer is a completely different universe. A small mistake can result in a very big problem.

Of course if there's no DBA, that's bad. Never sail without a compass. Never start a database project without an expert. A professional to take care of the most important part, the foundations.

If this is the case, ask your employer to hire a PostgreSQL DBA as soon as possible.

Saturday, 13 September 2014

Chapter 11 - A couple of things to know before start coding...

This is almost the entire chapter 11. I'm still writing the final section, I'd like to put into a separate post though. I've also almost finished the restore's performance. After this the book is complete. I will start a review to make it a decent writing before publishing onto lulu.com and amazon kindle.

Mastering the SQL is a slow and difficult process and requires some sort of empathy with the DBMS. Asking for advice to the database administrator is a good idea to get introduced in the the database mind. Having a few words with the DBA is a good idea in any case though.

Forgetting about the database design is like building a skyscraper from the top. If unfortunately the project is successful there are good chances to see it crumbling down instead of making money.

In particular one of the PostgreSQL's features, the MVCC, is completely ignored at design time. In 7.6 it's explained how is implemented and what are the risks when designing a data model. It doesn't matter if the database is simple or the project is small. Nobody knows how successful could be a new idea. Having a robust design will make the project to scale properly.

A similar approach can be used for the column names, making the data type immediately recognisable.

A query like this have many issues .

I'm not sure amazon permits to sell books for free I'll find a solution anyway.

A couple of things to know before start coding...

This chapter is completely different from the rest of the book. It's dedicated to the developers. PostgreSQL is a fantastic infrastructure for building powerful and scalable applications. In order to use al its potential there are some things to consider. In particular if coming from other DBMS there are subtle caveats that can make the difference between a magnificent success or a miserable failure.SQL is your friend

Recently the rise of the NOSQL approach,has shown more than ever how SQL is a fundamental requirement for managing the data efficiently. All the shortcuts, like the ORMs or the SQL engines implemented over the NOSQL systems, sooner or later will show their limits. Despite the bad reputation around it, the SQL language is very simple to understand. Purposely built with few simple English words is a powerful tool set for accessing, managing or defining the data model. Unfortunately this simplicity have a cost. The language must be parsed and converted into the database structure and sometimes there is a misunderstanding between what the developer wants and what the database understands.Mastering the SQL is a slow and difficult process and requires some sort of empathy with the DBMS. Asking for advice to the database administrator is a good idea to get introduced in the the database mind. Having a few words with the DBA is a good idea in any case though.

Design comes first

One of the worst mistakes when building an application is to forget about the foundation, the database. With the rise of the ORM this is happening more frequently than it could be expected. Sometimes the database itself is considered as storage, one of the biggest mistake possible.Forgetting about the database design is like building a skyscraper from the top. If unfortunately the project is successful there are good chances to see it crumbling down instead of making money.

In particular one of the PostgreSQL's features, the MVCC, is completely ignored at design time. In 7.6 it's explained how is implemented and what are the risks when designing a data model. It doesn't matter if the database is simple or the project is small. Nobody knows how successful could be a new idea. Having a robust design will make the project to scale properly.

Clean coding

One of the the first things a developer learns is how to write formatted code. The purpose of having clean code is double. It simplifies the code's reading to the other developers and improves the code management when, for example, is changed months after the writing. In this good practice the SQL seems to be an exception. Is quite common to find long queries written all lowercase, on one single line with keywords used as identifier. Trying just to understand what such query does is a nightmare. What follow is a list of good practice for writing decent SQL and avoid a massive headache to your DBA. If you don't have a DBA see 11.4.The identifier's name

Any DBMS have its way of managing the identifiers. PostgreSQL transforms the identifier's name in lowercase. This doesn't work very well with the camel case, however it's still possible to mix upper and lower case letters enclosing the identifier name between double quotes. This makes the code difficult to read and to maintain. Using the underscores in the old fashion way it makes things simpler.Self explaining schema

When a database structure becomes complex is very difficult to say what is what and how it relates with the other objects. A design diagram or a data dictionary can help. But they can be outdated or maybe are not generally accessible. Adopting a simple prefix to add to the relation's name will give an immediate outlook of the object's kind.| Object | Prefix |

|---|---|

| Table | t_ |

| View | v_ |

| Btree Index | idx_bt_ |

| GiST Index | idx_gst_ |

| GIN Index | idx_gin_ |

| Unique index | u_idx_ |

| Primary key | pk_ |

| Foreign key | fk_ |

| Check | chk_ |

| Unique key | uk_ |

| Type | ty_ |

| Sql function | fn_sql_ |

| PlPgsql function | fn_plpg_ |

| PlPython function | fn_plpy_ |

| PlPerl function | fn_plpr_ |

| Trigger | trg_ |

| rule | rul_ |

A similar approach can be used for the column names, making the data type immediately recognisable.

| Type | Prefix |

|---|---|

| Character | c_ |

| Character varying | v_ |

| Integer | i_ |

| Text | t_ |

| Bytea | by_ |

| Numeric | n_ |

| Timestamp | ts_ |

| Date | d_ |

| Double precision | dp_ |

| Hstore | hs_ |

| Custom data type | ty_ |

Query formatting

Having a properly formatted query helps to understand which objects are involved in the data retrieval and the relations between them. Even with a very simple query a careless writing can make it very difficult to understand.A query like this have many issues .

- using lowercase keywords it makes difficult to spot them

- the wildcard * hides which columns are effectively needed; it retrieves all the columns consuming more bandwidth than required; it prevents the index only scans

- the meaningless aliases like a and b it make difficult to understand which relations are involved.

- writing a statement with no indenting it makes difficult to understand the query's logic.

- All SQL keywords should be in upper case

- All the identifiers and kewrords should be grouped by line break and indented at the same level

- In the SELECT list specify all and only the columns required by the query

- Avoid the auto join in order to make clear the relation's logic

- Adopt meaningful aliases

Sunday, 7 September 2014

Chapter 6 final parts. Foreign, check and null constraints

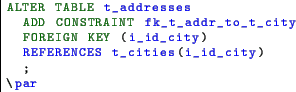

Foreign keys

A foreign key is a constraint enforced using the values another table's field. The classical

example is the tables storing the addresses and cities. We can store the addresses with the city

field, inline.

Being the city a duplicated value over many addresses, this will cause the table bloat by storing long strings, duplicated many and many times, alongside with the the address. Defining a table with the cities and referencing the city id in the addresses table will result in a smaller row size.

When dealing with referencing data, the main concern is the data consistency between the tables. In our example, putting an invalid identifier for the city in the t_addresses table will result missing data when joining. The same result will happen if for any reason the city identifier in the t_cities table is updated.

The foreign keys are designed to enforce the referential integrity. In our example will we'll enforce a strong relationship between the tables.

The key is enforced in two ways. When a row with an invalid i_id_city hits the table t_addresses the key is violated and the transaction aborts. Deleting a city from the t_cities table which id is referenced in the t_addresses, will violate the key. The same will updating a i_id_city referenced in the t_addresses.

The enforcement is performed via triggers. The pg_dump or pg_restore option -disable-trigger will permit the the data restore with the schema already in place. For more informations take a look to 9 and 10.

The FOREIGN KEYS have been enriched with an handful of options which make them very flexible. The referenced table can drive actions on the referencing using the two options ON DELETE and ON UPDATE. By default the behaviour is to take NO ACTION if there are referencing rows until the end of the transaction. This is useful if the key check should be deferred to the end of the transaction. The other two actions are the RESTRICT which does not allow the deferring and the CASCADE which cascade the action to the referred rows.

If we want our foreign key restrict the delete with no deferring and cascade any update, here's the DDL.

Another very useful clause available with the foreign and check constraints is the NOT VALID. When the constraint is created with NOT VALID, the initial check is skipped making the constraint creation instantaneous. This is acceptable if the actual data is consistent. The constraint is then enforced for all the new data. The invalid constraint can be validated later with the command VALIDATE CONSTRAINT.

Check constraints

A check constraint is a user defined check to enforce specific condtions on the rows.

The definition can be a condition or a used defined function. In this case the function must return

a boolean value. As for the foreign keys, the check accepts the NOT VALID clause to speed up the

creation.

The check is satisfied if the condition returns true or NULL. This behaviour can produce unpredictable results if not fully understood. An example will help to clarify. Let's create a CHECK constraint on the v_address table for enforcing a the presence of a value. Even with the check in place the insert without the address completes successfully.

This is possible because the v_address does not have a default value and accepts the NULL values. The check constraint is violated if, for example we'll try to update the v_address with the empty string.

Our check constraint will work as expected if we set for the v_address field a fallback default value.

Please note the existing rows are not affected by the default value change.

The message for the update and the insert is exactly the same because PostgreSQL attempts to create a new row in both cases. When the constraint fails the transaction is rolled back leaving the dead row in place. We'll take a better look to the MVCC in 7.6.

Not null

For people approaching the database universe the NULL value can be quite confusing. A NULL value is an empty object without any type or meaning. Actually when a field is NULL it doesn't consumes physical space. By default when defining a field this is NULLable. Those fields are quite useful to omit some columns, for example, at insert time.The NULLable fields can be enforced with an unique constraint as the NULL are not considered as duplicates. When dealing with the NULL it's important to remind the NULL acts like the mathematical zero. When evaluating an expression with a NULL, the entire expression becomes NULL.

The NOT NULL is a column constraint which forbids the presence of NULL in the affected field. Combining a NOT NULL with a unique constraint is exactly like having enforced a PRIMARY KEY. When altering a field the table is scanned for validation and the constraint's creation is aborted if any NULL value is present on the affected field.

For example, if we want to add the NOT NULL constraint to the field v_address in the t_addresses table the command is just.

In this case the alter fails because the column v_address contains NULL values from the example seen in 6.4. The fix can be performed in a single query using the coalesce function. This function returns the first not null value from the left. This way is possible to change the v_address on the fly to a fixed placeholder .

Adding new columns with NULL is quick. PostgreSQL simply adds the new attribute in the system catalogue and manages the new tuple structure considering the new field as empty space. Adding a field with NOT NULL requires the presence of the DEFAULT value as well. This is an operation to consider carefully when dealing with big amount of data. This way the table will be locked in exclusive mode and a complete relation's rewrite will happen. A far better way is to add a NOT NULL field is to add at first as NULLable field. A second alter will add the default value to have the new rows correctly set. An update will then fix the NULL values without locking the table. Finally the NOT NULL could be enforced without hassle.

Monday, 25 August 2014

The missing chapter 6 part 1 and two, data integrity

I've started the sixth chapter, the one on the data integrity I've forgotten. There are the first two parts alongside with the introduction. I've also updated the book on slideshare with the new cover and the last incomplete chapter for the developers. The beautiful cover is made by Chiaretta & Bon. Kudos and many thanks.

I've also uploaded the latex sources on github for anybody to fork and review my crappy english. My former colleague and friend Craig Barnes already started reviewing the tex files, many thanks for the priceless help.

Here's the github repository url : https://github.com/the4thdoctor/pgdba_books

Data integrity

There's just one thing worse than losing the database. Having the data set full of rubbish. The data integrity has been part of PostgreSQL since the beginning. It offers various levels of strength ensuring the data is clean and consistent. In this chapter we'll have a brief look to the various constraints available. The PostgreSQL's constraints can be grouped in two kind. The table constraints and the column constraints. The table constraints are defined on the table's definition after the field's list. The column constraints appear in the field's definition after the data type. Usually for the primary keys and the unique keys the definition is written as table constraint.The constraint applies the enforcement to any table's row without exclusion. When creating a table constraint on a fully populated table the data is validated first. Any validation error aborts the constraint creation. However, the foreign keys and check constraints accept the clause NOT VALID. With this clause the database assumes the data is valid and skips the validation. The cration is almost immediate. The new constraint is then enforced only for the new data. When using this option the data must be consistent.

Primary keys

A primary key is the unique row identifier. Having this constraint enforced ensures the row can be addressed directly using the key value. A primary key can be enforced on a single or multi column. The data aspect must be unique with the strictest level. That means the NULL values are not permitted in columns participating to the primary key. When creating a primary key this implicitly adds a new unique index on the affected fields. In order to avoid the exclusive lock on the affected table the unique index can be built before the primary using the CONCURRENTLY clause and then used in the primary key definition as shown in 8.3. Using the primary key is the fastest way to access the table's contents.There is the primary key definition as table and column constraint.

With the table's constraint definition is possible to specify the constraint name and to have a multi column constraint. When writing a multi column constraint the participating columns should be listed separate by commas.

The most common primary key implementation, and probably the best, is to have a serial column as primary key. A serial field is short for integer NOT NULL which default value is associated to the nextval for an auto generated sequence. Because the sequence have its upper limit to the bigint upper limit, this ensures the data does not wraps in the table's lifetime. In the case the primary key is expected to reach the value of 2,147,483,647 the type of choice should be bigserial rather serial. This will create the primary key's field as bigint which upper limit is 9,223,372,036,854,775,807.

However it's still possible to alter the field later in order to match the new requirements. Because changing the data type requires a complete table's rewrite, any view referencing the affected column will abort the change.

Here's the t_data's type change output with the client message level set to debug3.

Dealing with a big amount of data presents also the problem to have enough space for fitting twice of the original table plus the downtime caused by the exclusive lock on the affected relation. A far better approach is to add a new bigint NULLable column without default value. Setting up a trigger for the inserts will keep in sync the new values with the original primary key. Then an update procedure will set the value for the rows. This should run in small batches to avoid to overfill the pg_xlog directory with long running transactions. When everything is in place the new column could then become NOT NULL and a unique index will finally enforce the uniqueness for the new field.

The primary key can then be dropped and recreated using the new unique index. This is permitted only if there's no foreign key referencing the field. In this case a multi drop and create statement is required. The final cleanup should include the trigger's drop and the old primary key removal. Any view using the old primary key should be rebuilt before the drop.

Unique keys

The unique keys are similar to the primary keys. They enforce the uniqueness using an implicit index but they allow the presence of NULL values. Their usage is for enforcing uniqueness on columns not used as primary key. Similar to the primary key the unique constraints are based on a unique index. In fact there's little difference between the unique index and the unique key except the presence of the latter in the system table pg_constraint.Sunday, 17 August 2014

Chapter 10 part 2 - The binary formats

The three binary formats supported by pg_dump are the custom, the directory and the tar format.

The first two can be accessed randomly by the restore program and have the parallel restore

support, being the best choice for having a flexible and reliable restore. Before the the 9.3 the

only format supporting the parallel restore was the custom. With this version the directory

format accepts the -j switch. This feature, combined with the parallel dump seen in

9.3 is a massive improvement for saving big amount of data. The tar format does

have the limit of 12 GB in the archive's file size and doesn't offer the parallel restore nor the

selective restore.

The custom format is a binary archive with a table of contents pointing the various archive sections. The directory format is a directory which name is the value provided with the -f switch. The directory contents are a toc.dat file, where the table of contents and the schema are stored. For each table there is a gzip file which name is a number corresponding to the toc entry for the saved relation. Those files store the data restore for the relation.

The restore from the binary formats requires the pg_restore usage. Because almost all the pg_dump's switches are supported by pg_restore we'll not repeat the look out. Take a look to 9.1 for the switch meanings. Anyway this is the pg_restore's help output.

The the custom and directory formats show their power when restoring on a database connection in a multi core system. Using the -j switch it's possible to specify the number of parallel jobs for the data and the post data section. This can improve massively the recovery time, running the most time consuming actions in multiple processes.

The word parallel can be confusing in some way. PostgreSQL does not supports multithreading. That means each backend process will use just only one cpu. In this context, each job take care of a different area of the restore's table of contents, The TOC is split in many queues with a fixed object list to process. For example one queue will contain the data restoration for a table, and the relation's indices and constraints.

The switch -section offers a fine grain control on which section of the archived data will be restored. In a custom and directory format there are three distinct sections.

We'll now will see how to restore the database seen in 10.1 in the same two steps approach, using the custom format.

Let's start with a complete database dump using the custom format.

We'll then restore just the schema using the following command.

The second restore's step is the data load. In the example seen in 10.1 we used the pg_dump with -disable-triggers switch in order to avoid failures caused by constraint violation. With the custom format the switch is used at restore time.

We'll first restore the pre-data section10.1.

Loading the data contents without indices maximise the speed. The constraint and index build with the data already in place results in a faster build and a fresh index without any bloat.

The custom format is a binary archive with a table of contents pointing the various archive sections. The directory format is a directory which name is the value provided with the -f switch. The directory contents are a toc.dat file, where the table of contents and the schema are stored. For each table there is a gzip file which name is a number corresponding to the toc entry for the saved relation. Those files store the data restore for the relation.

The restore from the binary formats requires the pg_restore usage. Because almost all the pg_dump's switches are supported by pg_restore we'll not repeat the look out. Take a look to 9.1 for the switch meanings. Anyway this is the pg_restore's help output.

pg_restore restores a PostgreSQL database from an archive created by pg_dump.

Usage:

pg_restore [OPTION]... [FILE]

General options:

-d, --dbname=NAME connect to database name

-f, --file=FILENAME output file name

-F, --format=c|d|t backup file format (should be automatic)

-l, --list print summarized TOC of the archive

-v, --verbose verbose mode

-V, --version output version information, then exit

-?, --help show this help, then exit

Options controlling the restore:

-a, --data-only restore only the data, no schema

-c, --clean clean (drop) database objects before recreating

-C, --create create the target database

-e, --exit-on-error exit on error, default is to continue

-I, --index=NAME restore named index

-j, --jobs=NUM use this many parallel jobs to restore

-L, --use-list=FILENAME use table of contents from this file for

selecting/ordering output

-n, --schema=NAME restore only objects in this schema

-O, --no-owner skip restoration of object ownership

-P, --function=NAME(args) restore named function

-s, --schema-only restore only the schema, no data

-S, --superuser=NAME superuser user name to use for disabling triggers

-t, --table=NAME restore named table(s)

-T, --trigger=NAME restore named trigger

-x, --no-privileges skip restoration of access privileges (grant/revoke)

-1, --single-transaction restore as a single transaction

--disable-triggers disable triggers during data-only restore

--no-data-for-failed-tables do not restore data of tables that could not be

created

--no-security-labels do not restore security labels

--no-tablespaces do not restore tablespace assignments

--section=SECTION restore named section (pre-data, data, or post-data)

--use-set-session-authorization

use SET SESSION AUTHORIZATION commands instead of

ALTER OWNER commands to set ownership

Connection options:

-h, --host=HOSTNAME database server host or socket directory

-p, --port=PORT database server port number

-U, --username=NAME connect as specified database user

-w, --no-password never prompt for password

-W, --password force password prompt (should happen automatically)

--role=ROLENAME do SET ROLE before restore

If no input file name is supplied, then standard input is used.

Report bugs to <pgsql-bugs@postgresql.org>.

If the database connection is omitted pg_restore sends the output to the standard output. The

switch -f sends the output to a filename though. This is very useful if we want to check the

original dump file is readable, executing a restore onto the /dev/null device.

The the custom and directory formats show their power when restoring on a database connection in a multi core system. Using the -j switch it's possible to specify the number of parallel jobs for the data and the post data section. This can improve massively the recovery time, running the most time consuming actions in multiple processes.

The word parallel can be confusing in some way. PostgreSQL does not supports multithreading. That means each backend process will use just only one cpu. In this context, each job take care of a different area of the restore's table of contents, The TOC is split in many queues with a fixed object list to process. For example one queue will contain the data restoration for a table, and the relation's indices and constraints.

The switch -section offers a fine grain control on which section of the archived data will be restored. In a custom and directory format there are three distinct sections.

- pre-data This section restores only the schema definitions not affecting the speed and reliability of the data restore. e.g. table's DDL, functions creation, extensions, etc.

- data The data restore itself, by default saved as COPY statements to speed up the process

- post-data This section runs the restore for all the objects enforcing the data integrity, like the primary and foreign keys, triggers and the indices which presence during the restore slow down the data reload massively.

We'll now will see how to restore the database seen in 10.1 in the same two steps approach, using the custom format.

Let's start with a complete database dump using the custom format.

postgres@tardis:~/dump$ pg_dump -Fc -f db_addr.dmp db_addr pg_dump: reading schemas pg_dump: reading user-defined tables pg_dump: reading extensions pg_dump: reading user-defined functions pg_dump: reading user-defined types pg_dump: reading procedural languages pg_dump: reading user-defined aggregate functions pg_dump: reading user-defined operators pg_dump: reading user-defined operator classes pg_dump: reading user-defined operator families pg_dump: reading user-defined text search parsers pg_dump: reading user-defined text search templates pg_dump: reading user-defined text search dictionaries pg_dump: reading user-defined text search configurations pg_dump: reading user-defined foreign-data wrappers pg_dump: reading user-defined foreign servers pg_dump: reading default privileges pg_dump: reading user-defined collations pg_dump: reading user-defined conversions pg_dump: reading type casts pg_dump: reading table inheritance information pg_dump: reading event triggers pg_dump: finding extension members pg_dump: finding inheritance relationships pg_dump: reading column info for interesting tables pg_dump: finding the columns and types of table "t_address" pg_dump: finding default expressions of table "t_address" pg_dump: finding the columns and types of table "t_city" pg_dump: finding default expressions of table "t_city" pg_dump: flagging inherited columns in subtables pg_dump: reading indexes pg_dump: reading indexes for table "t_address" pg_dump: reading indexes for table "t_city" pg_dump: reading constraints pg_dump: reading foreign key constraints for table "t_address" pg_dump: reading foreign key constraints for table "t_city" pg_dump: reading triggers pg_dump: reading triggers for table "t_address" pg_dump: reading triggers for table "t_city" pg_dump: reading rewrite rules pg_dump: reading large objects pg_dump: reading dependency data pg_dump: saving encoding = UTF8 pg_dump: saving standard_conforming_strings = on pg_dump: saving database definition pg_dump: dumping contents of table t_address pg_dump: dumping contents of table t_cityWe'll use a second database for the restore.

We'll then restore just the schema using the following command.

postgres@tardis:~/dump$ pg_restore -v -s -d db_addr_restore_bin db_addr.dmp pg_restore: connecting to database for restore pg_restore: creating SCHEMA public pg_restore: creating COMMENT SCHEMA public pg_restore: creating EXTENSION plpgsql pg_restore: creating COMMENT EXTENSION plpgsql pg_restore: creating TABLE t_address pg_restore: creating SEQUENCE t_address_i_id_addr_seq pg_restore: creating SEQUENCE OWNED BY t_address_i_id_addr_seq pg_restore: creating TABLE t_city pg_restore: creating SEQUENCE t_city_i_id_city_seq pg_restore: creating SEQUENCE OWNED BY t_city_i_id_city_seq pg_restore: creating DEFAULT i_id_addr pg_restore: creating DEFAULT i_id_city pg_restore: creating CONSTRAINT pk_i_id_city pg_restore: creating CONSTRAINT pk_id_address pg_restore: creating FK CONSTRAINT fk_t_city_i_id_city pg_restore: setting owner and privileges for DATABASE db_addr pg_restore: setting owner and privileges for SCHEMA public pg_restore: setting owner and privileges for COMMENT SCHEMA public pg_restore: setting owner and privileges for ACL public pg_restore: setting owner and privileges for EXTENSION plpgsql pg_restore: setting owner and privileges for COMMENT EXTENSION plpgsql pg_restore: setting owner and privileges for TABLE t_address pg_restore: setting owner and privileges for SEQUENCE t_address_i_id_addr_seq pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_address_i_id_addr_seq pg_restore: setting owner and privileges for TABLE t_city pg_restore: setting owner and privileges for SEQUENCE t_city_i_id_city_seq pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_city_i_id_city_seq pg_restore: setting owner and privileges for DEFAULT i_id_addr pg_restore: setting owner and privileges for DEFAULT i_id_city pg_restore: setting owner and privileges for CONSTRAINT pk_i_id_city pg_restore: setting owner and privileges for CONSTRAINT pk_id_address pg_restore: setting owner and privileges for FK CONSTRAINT fk_t_city_i_id_cityThe dump file is specified as last parameter. The -d switch tells pg_restore which database to connect for the restore. By default the postgres user usually connects using the ident operating system daemon or the trust authentication method, when connected as local. That's the reason why in this example there's no need of specifying the username or enter the password.

The second restore's step is the data load. In the example seen in 10.1 we used the pg_dump with -disable-triggers switch in order to avoid failures caused by constraint violation. With the custom format the switch is used at restore time.

postgres@tardis:~/dump$ pg_restore --disable-triggers -v -a -d db_addr_restore_bin db_addr.dmp pg_restore: connecting to database for restore pg_restore: disabling triggers for t_address pg_restore: processing data for table "t_address" pg_restore: enabling triggers for t_address pg_restore: executing SEQUENCE SET t_address_i_id_addr_seq pg_restore: disabling triggers for t_city pg_restore: processing data for table "t_city" pg_restore: enabling triggers for t_city pg_restore: executing SEQUENCE SET t_city_i_id_city_seq pg_restore: setting owner and privileges for TABLE DATA t_address pg_restore: setting owner and privileges for SEQUENCE SET t_address_i_id_addr_seq pg_restore: setting owner and privileges for TABLE DATA t_city pg_restore: setting owner and privileges for SEQUENCE SET t_city_i_id_city_seqHowever, this approach does not prevent the slowness caused by the indices when reloading the data. If a restore with multiple steps is required (e.g. creating the database schema and check all the relations are in place before starting) the section switch is a better choice. Let's see how it works with the example seen before.

We'll first restore the pre-data section10.1.

postgres@tardis:~/dump$ pg_restore --section=pre-data -v -d db_addr_restore_bin db_addr.dmp pg_restore: connecting to database for restore pg_restore: creating SCHEMA public pg_restore: creating COMMENT SCHEMA public pg_restore: creating EXTENSION plpgsql pg_restore: creating COMMENT EXTENSION plpgsql pg_restore: creating TABLE t_address pg_restore: creating SEQUENCE t_address_i_id_addr_seq pg_restore: creating SEQUENCE OWNED BY t_address_i_id_addr_seq pg_restore: creating TABLE t_city pg_restore: creating SEQUENCE t_city_i_id_city_seq pg_restore: creating SEQUENCE OWNED BY t_city_i_id_city_seq pg_restore: creating DEFAULT i_id_addr pg_restore: creating DEFAULT i_id_city pg_restore: setting owner and privileges for DATABASE db_addr pg_restore: setting owner and privileges for SCHEMA public pg_restore: setting owner and privileges for COMMENT SCHEMA public pg_restore: setting owner and privileges for ACL public pg_restore: setting owner and privileges for EXTENSION plpgsql pg_restore: setting owner and privileges for COMMENT EXTENSION plpgsql pg_restore: setting owner and privileges for TABLE t_address pg_restore: setting owner and privileges for SEQUENCE t_address_i_id_addr_seq pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_address_i_id_addr_seq pg_restore: setting owner and privileges for TABLE t_city pg_restore: setting owner and privileges for SEQUENCE t_city_i_id_city_seq pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_city_i_id_city_seq pg_restore: setting owner and privileges for DEFAULT i_id_addr pg_restore: setting owner and privileges for DEFAULT i_id_cityAgain the pg_restore loads the objects with the ownership and privileges. What's missing is the constraints creation. The second step is the data section's load.

postgres@tardis:~/dump$ pg_restore --section=data -v -d db_addr_restore_bin db_addr.dmp pg_restore: connecting to database for restore pg_restore: implied data-only restore pg_restore: processing data for table "t_address" pg_restore: executing SEQUENCE SET t_address_i_id_addr_seq pg_restore: processing data for table "t_city" pg_restore: executing SEQUENCE SET t_city_i_id_city_seq pg_restore: setting owner and privileges for TABLE DATA t_address pg_restore: setting owner and privileges for SEQUENCE SET t_address_i_id_addr_seq pg_restore: setting owner and privileges for TABLE DATA t_city pg_restore: setting owner and privileges for SEQUENCE SET t_city_i_id_city_seqThis section simply loads the table's data and sets the sequence values. Apart for the ownership no further action is performed. Finally we'll run the post-data section.

postgres@tardis:~/dump$ pg_restore --section=post-data -v -d db_addr_restore_bin db_addr.dmp pg_restore: connecting to database for restore pg_restore: creating CONSTRAINT pk_i_id_city pg_restore: creating CONSTRAINT pk_id_address pg_restore: creating FK CONSTRAINT fk_t_city_i_id_city pg_restore: setting owner and privileges for CONSTRAINT pk_i_id_city pg_restore: setting owner and privileges for CONSTRAINT pk_id_address pg_restore: setting owner and privileges for FK CONSTRAINT fk_t_city_i_id_cityWith this run the constrains (and eventually all the indices) are created in the best approach possible when dealing with the bulk data processing.

Loading the data contents without indices maximise the speed. The constraint and index build with the data already in place results in a faster build and a fresh index without any bloat.

Monday, 11 August 2014

Pg chamelion and dba reactions

Finally I've found some time to complete a working prototype of the new library pg_chamelion.

The github repos is here, https://github.com/the4thdoctor/pg_chameleon, please fork it if you want to debug or give me some feedback.

The library exports the metadata from mysql using sqlalchemy. The informations are used by the PostgreSQL library to rebuild the schema in a PostgreSQL database. Finally the data is dumped to multiple files in CSV format and reloaded into PostgreSQL using the copy_expert command.

The MySQL data is exported in the CSV format using a custom SQL query with the MySQL's (not standard) syntax for the string concatenation.

The replace function is also used to have the double quotes escaped.

The copy into PostgreSQL uses the psycopg2's copy_expert cursor's method, quick and very efficient.

In the future I'll change the config file format to YAML because is simpler to manage and to write.

I'm also planning to write an alternative library to get the metadata from mysql.

I think in general using any ORM is a bad choice. The performance tuning of their horrible queries, when the amount of data becomes serious, is a pain in the ass.

IMHO their usage should be limited for toy sized databases or for quick and dirt proof of concept.

And if you think I'm rude then take a look to http://dbareactions.com/ .

The github repos is here, https://github.com/the4thdoctor/pg_chameleon, please fork it if you want to debug or give me some feedback.

The library exports the metadata from mysql using sqlalchemy. The informations are used by the PostgreSQL library to rebuild the schema in a PostgreSQL database. Finally the data is dumped to multiple files in CSV format and reloaded into PostgreSQL using the copy_expert command.

The MySQL data is exported in the CSV format using a custom SQL query with the MySQL's (not standard) syntax for the string concatenation.

The replace function is also used to have the double quotes escaped.

The copy into PostgreSQL uses the psycopg2's copy_expert cursor's method, quick and very efficient.

In the future I'll change the config file format to YAML because is simpler to manage and to write.

I'm also planning to write an alternative library to get the metadata from mysql.

I think in general using any ORM is a bad choice. The performance tuning of their horrible queries, when the amount of data becomes serious, is a pain in the ass.

IMHO their usage should be limited for toy sized databases or for quick and dirt proof of concept.

And if you think I'm rude then take a look to http://dbareactions.com/ .

Wednesday, 6 August 2014

Chapter 10 intro and part 1. restoring with plain format

Yes I know, the previous chapter was number 8, this is number 10.

The reason why is I realised I forgot completely to talk about the constraints so I've added a data integrity chapter just after the the logical layout and the numbers shifted.

Anyway the pdf on slideshare is updated with the latest version.

The backup format determines which program will be used for the restore. We'll take a look first on the restore from plain format. Later we'll see how the custom and directory formats are the best choice, giving flexibility and performance at restore time. Finally we'll see how to improve database performances at restore time sacrificing temporarily the reliability.

This format have few advantages. For example it's possible to edit the statement using a common text editor. This of course if the dump is reasonably small. Even loading a file with vim when its size is measured in gigabytes becomes a stressful experience.

The data is saved by default in the copy format. This guarantee the best performances at load time. It's still possible to save the data using the inserts but this will result in a very slow restore, having each statement to be parsed and planned.

Saving the schema and data in two separate files requires also an extra care at dump time. Restoring from a data only plain backup will very likely result in tables with foreign keys having their data missing because the key violation.

In order to avoid the problem, at backup time and when running a data only backup, the switch -disable-triggers should be used. This will emit the DISABLE TRIGGER statements before the data load and the ENABLE TRIGGER after the data is consistently restored. The following example will show a dump reload session with the separate schema and data save.

Let's create the simple data structure. We'll create a new database with a table for the addresses and another one for the cities. Between the cities an the addresses a foreign key will enforce the relation on the id city column.

Now let's put some data into it.

We'll now execute two dumps one for the schema and one for the data without the disable triggers switch.

Looking to the schema dump it's quite obvious what it does. All the DDL are saved in the correct order to restore the same database structure .

The data is then saved by pg_dump in the correct order for having the referential integrity guaranteed. In our very simple example the table t_city is dumped before the table t_address. This way the data will not violate the foreign key. In a scenario where a complex structure is dumped, this cannot be guaranteed. Let's run the same dump with the option -disable-trigger.

The copy statements in this case are enclosed by two extra statements for disabling and re enabling the triggers.

This way the FOREIGN KEY's triggers with any user defined trigger, will be disabled during the restore, ensuring the data will be safely stored. After the restoration the enable will restore any constraint enforcement.

The data saved in plain format is then restored using the command line client psql.

Let's then create a new database and restore it the saved dumps, first schema and then the data.

The reason why is I realised I forgot completely to talk about the constraints so I've added a data integrity chapter just after the the logical layout and the numbers shifted.

Anyway the pdf on slideshare is updated with the latest version.

Restore

There's little advantage in saving the data if the recover is not possible. In this chapter we'll take a look to the fastest and safest way to restore recover the saved dump.The backup format determines which program will be used for the restore. We'll take a look first on the restore from plain format. Later we'll see how the custom and directory formats are the best choice, giving flexibility and performance at restore time. Finally we'll see how to improve database performances at restore time sacrificing temporarily the reliability.

The plain format

As seen in 9 the pg_dump by default saves the entire database in plain format. This is an SQL script offering nothing but a straightforward reload strategy. Feeding the saved file into psql rebuilds completely the objects.This format have few advantages. For example it's possible to edit the statement using a common text editor. This of course if the dump is reasonably small. Even loading a file with vim when its size is measured in gigabytes becomes a stressful experience.

The data is saved by default in the copy format. This guarantee the best performances at load time. It's still possible to save the data using the inserts but this will result in a very slow restore, having each statement to be parsed and planned.

Saving the schema and data in two separate files requires also an extra care at dump time. Restoring from a data only plain backup will very likely result in tables with foreign keys having their data missing because the key violation.

In order to avoid the problem, at backup time and when running a data only backup, the switch -disable-triggers should be used. This will emit the DISABLE TRIGGER statements before the data load and the ENABLE TRIGGER after the data is consistently restored. The following example will show a dump reload session with the separate schema and data save.

Let's create the simple data structure. We'll create a new database with a table for the addresses and another one for the cities. Between the cities an the addresses a foreign key will enforce the relation on the id city column.

Now let's put some data into it.

We'll now execute two dumps one for the schema and one for the data without the disable triggers switch.

postgres@tardis:~/dmp$ pg_dump --schema-only db_addr > db_addr.schema.sql

postgres@tardis:~/dmp$ pg_dump --data-only db_addr > db_addr.data.sql

Looking to the schema dump it's quite obvious what it does. All the DDL are saved in the correct order to restore the same database structure .

The data is then saved by pg_dump in the correct order for having the referential integrity guaranteed. In our very simple example the table t_city is dumped before the table t_address. This way the data will not violate the foreign key. In a scenario where a complex structure is dumped, this cannot be guaranteed. Let's run the same dump with the option -disable-trigger.

postgres@tardis:~/dmp$ pg_dump --disable-triggers --data-only db_addr > db_addr.data.sql

The copy statements in this case are enclosed by two extra statements for disabling and re enabling the triggers.

This way the FOREIGN KEY's triggers with any user defined trigger, will be disabled during the restore, ensuring the data will be safely stored. After the restoration the enable will restore any constraint enforcement.

The data saved in plain format is then restored using the command line client psql.

Let's then create a new database and restore it the saved dumps, first schema and then the data.

Friday, 1 August 2014

Chapter 8's final two parts. pg_dumpall and the export's validation

pg_dumpall

The pg_dumpall program is mostly a wrapper for pg_dump. It's usage is mainly for dumping all the databases in the cluster. Having lesser options than pg_dump is also less flexible. However one option is absolutely useful and should be included in any backup plan to ensure a rapid recovery in case of disaster.The -globals-only option saves on the standard output all the cluster wide options like the tablespaces, the roles and the privileges. The passwords are dumped as well encrypted in md5 format. The dump format for this utility is only text. The best way to save the globals is to specify the -f option followed by the file name. This file can be loaded into an empty cluster to recreate the global objects. The tablespaces, if any present, must have the filesystem already in place before running the sql as PostgreSQL doesn't create the filesystem structure.

This example shows the program call and the contents of the output file.

postgres@tardis:~/dmp$ pg_dumpall --globals-only -f main_globals.sql postgres@tardis:~/dmp$ cat main_globals.sql -- -- PostgreSQL database cluster dump -- SET default_transaction_read_only = off; SET client_encoding = 'UTF8'; SET standard_conforming_strings = on; -- -- Roles -- CREATE ROLE postgres; ALTER ROLE postgres WITH SUPERUSER INHERIT CREATEROLE CREATEDB LOGIN REPLICATION; -- -- PostgreSQL database cluster dump complete -- postgres@tardis:~/dmp$

Backup validation

There's little advantage in having a backup if this is not restorable. The corruption danger is at various levels and unfortunately the problem appears only when the restore is needed.The corruption have many causes. If the dump is saved locally a damaged filesystem can hide the problem meanwhile the corrupted block is not read. Also the disk subsystem with undetected problems will result in a silent corruption. In order to limit this kind of problems the filesystem of choice should be a solid one with strong journaling support.

The disk subsystem should guarantee the data reliability rather the speed. Slow disks when backing up the data, in particular if in the compressed format don't limit the speed, being the cpu power the real bottleneck.

If the dump file is transferred over the network is a good idea to generate a md5 checksum to check the file integrity after the transfer.

All those measures don't give the security the backup is restorable. The only test capable to ensure the backup is good is a test restore on separate server. This can be a single test or a more structured check. Which strategy to adopt is determined by the amount of data, the time required for the restore and the backup schedule.

The general purpose databases, which size is measurable in hundreds of gigabytes, the restore can complete in few hours and the continuous test is feasible. For the VLDB, which size is measured in terabytes, the restore can take more than one day, in particular if there are big indices requiring expensive sort on disk for the build. In this scenario a weekly restore gives a good perception if there are potential problems with the saved data.

Sunday, 27 July 2014

Chapter 8 - part 3, under the bonnet. With a bonus

Snapshot exports

Since PostgreSQL 9.2 are supported the transaction's snapshot exports. A session with an open transaction, can export its consistent snapshot to any other session. The snapshot remains valid meanwhile the transaction is open. Using this functionality offers a way to run multiple backends on a consistent data set frozen in time. This feature resulted in the brilliant parallel export in the 9.3's pg_dump as described in 8.3.In the following example, let's consider the table created in 5.5. We'll first start an explicit transaction and then we'll export the current snapshot.

We are first starting a transaction with the REPEATABLE READ isolation level. The second statement exports the current snapshot using the function pg_export_snapshot(). Finally we are checking with a simple row count the table t_data have data inside.

We can now login with in a different session and delete all the rows from the t_data table.

With the table now empty let's import the snapshot exported by the first backend.

The function pg_export_snapshot saves the current snapshot returning the text string which identifies the snapshot. Passing the string to clients that want to import the snapshot gives to independent sessions a single consistent vision. The import is possible only until the end of the transaction that exported it. The export is useful only in the READ COMMITTED transactions, because the REPEATABLE READ and higher isolation levels use the same snapshot within their lifetime.

pg_dump under the bonnet

The pg_dump source code gives a very good picture of what exactly the backup software does. The process runs into with fixed transaction's isolation level accordingly with the server's version. The distinction is required because, becoming PostgreSQL more sophisticated at each major release, the isolation levels became more and more strict with their meanings.More informations about the transactions are in 5.7.

From PostgreSQL 9.1 the transaction serializable became a real serialisation. The transaction's behaviour offered by the serializable in the version up to 9.0 were assigned to the REPETABLE READ transaction's isolation, its real kind. The SERIALIZABLE transaction's isolation level is still used with DEFERRABLE option when pg_dump is executed with the option -serializable-deferrable as seen in 8.1.3. The switch have effect only on the remote server with version 9.1 and later. The transaction is also set to READ ONLY, when supported by the server, in order to limit the XID generation.

|

From the version 9.3 pg_dump supports the parallel dump using the feature seen in 5.7.1. As the snapshot export is supported from the version 9.2 this permit a parallel dump from the older major version if using the newer backup program. However, pg_dump accepts the option -no-synchronized-snapshots in order to dump in parallel jobs from the older versions. The data is not supposed to be consistent if there are read write transactions during this kind of export. To have a consistent export in this case all the transactions which modify the data must be stopped meanwhile the export is in progress.

When exporting in parallel the only permitted format is the directory. The pg_restore program since the version 9.3 supports also the directory format for the paralelle data restoration. The combination of the parallel jobs backup and the parallel jobs restore, can improve massively either the backup and the recovery speed in case of disaster.

Wednesday, 23 July 2014

Chapter 8 - part 2, performance tips

Performance tips

Despite the fact pg_dump doesn't affects the running queries, its strict transactional approach have some effects on the affected schema. Any alter schema is blocked until the backup's end. The vacuum efficiency is affected as well, because all the dead rows generated during the backup's run, cannot be reclaimed being potentially required by the backup's running transaction.There are some tips to improve the backup's speed.

Avoid remote backups

The pg_dump can connect to remote databases same like any other PostgreSQL client. It seems reasonable then to use the program installed on a centralised storage and to dump locally from the remote cluster.Unfortunately even using the compressed format, the entire database flows uncompressed and in clear, from the server to the remote pg_dump. The compression happens locally when the data is received.

This approach expose also a network security issue. If the environment is not trusted then the remote connection must happen on a secure channel. This add an extra overhead to the transfer and any failure on this layer will fail the entire backup.

A far better approach is to save locally the database, using the local connection if possible, and then copy the entire dump file using a secure transfer protocol like scp or sshfs.

Skip replicated tables

If the database is configured as logical slave in slony or londiste for example, backing up the replicated table's data is not important as the contents are re synchronised from the master when the node is attached to the replication system. The switch -exclude-table-data=TABLE is then useful for dumping the table's definition only without the contents.Check for slow cpu cores

PostgreSQL is a multitasking but not a multithreaded database system. Each backend is attached to just one cpu. The pg_dump opens one backend connection to the cluster in order to export the database objects. The pg_dump process receives the data flow from the backend and it saves performing also the optional compression. In this scenario the cpu power is critical in order to avoid a bottleneck. This could be helped using the parallel export offered by pg_dump from the version 9.3. The functionality is implemented via the snapshot exports. As this was introduced with PostgreSQL 9.2 the paralle export can happen only from this version and only if the output format is directory .Check for the available locks

PostgreSQL at various level uses the locks to ensure the data consistency at various levels. For example, when a table is read an access share lock on the relation is put in order to avoid any structure change. Any backend issuing an ALTER TABLE which affect the table structure, will wait for the lock to be released before acquiring itself an exclusive lock and then perform the change. The relation's locks are stored into the pg_locks table. This table is quite unique because have a limited number of rows. The maximum number of table's lock slot is determine with this simple formula.

max_locks_per_transaction * (max_connections + max_prepared_transactions)

The default configuration permits have only 6400 table's lock slots. This value is generally OK. However, if the database have a great number of relations, a full backup, pg_dump could hit the slot limit and fail with an out of memory error.

All the three GUC parameters require a restart to apply the new changes so is very important to plan the change before the limit is reached.

Try to use flexible formats

This is more a good practice suggestion rather a performance tip. Exporting the database in plain text have some advantages. Is possible to load the dump just using psql and any file's corruption can be managed in a simple way; if the damage is limited of course. The custom and directory formats need the pg_restore utility for the restoration. We'll take a look to this approach in 9. Anyway, the custom and directory formats have alongside with the compression, the parallel restore feature and the selective restoration. The compression can be fine tuned to suit the export's nature. In this era of ``big data'' is something to consider seriously.Sunday, 13 July 2014

Chapter 8 - Part 1, pg_dump at glance

Pretty busy week (and week end), I was able to write just those boring lines mostly derived from the pg_dump's help. I'll do better next time, I promise.

As seen in 3.1.5, pg_dump is the PostgreSQL's utility for saving consistent backups.

Its usage is quite simple and if launched without options it tries to connect to the local cluster

with the current user sending the dump to the standard output.

The pg_dump help gives useful informations about the usage.

The PGPASSWORD variable is considered not secure and shouldn't be used if untrusted users are accessing the server. The password file is a text file named .pgpass and stored in the home directory of the os user which connects to the cluster.

Each file's line specify a connection using the following format.

The -F specifies the backup format and requires a second option to tell pg_dump which format to use. The option can be one of those, c d t p which corresponds to custom directory tar plain.

If the parameter is omitted then pg_dump uses the p format. This outputs a SQL script which recreates the objects when loaded into an empty database. The format is not compressed and is suitable for direct load using the client psql.

The the custom together with the directory format is most versatile format. It offers compression and flexibility at restore time. The file offers the parallel restore functionality and the selective restore of single objects.

The directory format stores the schema dump, the dump's table of contents alongside with the compressed data dump in the directory specified with the -f switch. Each table is saved in a different file and is compressed by default.From the version 9.3 this format offers the parallel dump functionality.

The tar format stores the dump in the conservative tape archive format. This format is compatible with directory format, does not supports compression and have the 8 GB limit on the size of individual tables.

The -j option specifies the number of jobs to run in parallel for dumping the data. This feature appeared in the version 9.3 and uses the transaction's snapshot export to give a consistent data snapshot to the export jobs. The switch is usable only with the directory format and only against PostgreSQL 9.2 and later.

The option -Z specifies the compression level for the compressed formats. The default is 5 resulting in a dumped archive from 5 to 8 times smaller than the original database.

The option -lock-wait-timeout is the number of milliseconds for the table's lock acquisition. When expired the dump will fail. Is useful to avoid the program to wait forever for a table lock but can result in failed backups if set too much low.

The -a option sets the data only export. Separating schema and data have some effects at restore time, in particular with the performance. We'll see in the detail in 9 how to build an efficient two phase restore.

The -b option exports the large objects. This is the default setting except if the -n switch is used. In this case the -b is required to export the large objects.

The options -c and -C are meaningful only for the plain output format. They respectively add the DROP and CREATE command before the object's DDL. For the archive formats the same option exists for pg_restore.

The -E specifies the character encoding for the archive. If not set the origin database encoding will be used.

The -n switch is used to dump the named schema only. It's possible to specify multiple -n switches to select many schemas or using the wildcards. However despite the efforts of pg_dump to get all the dependencies resolved, something could be missing. There's no guarantee the resulting archive can be successfully restored.

The -N switch does the opposite of the -n switch. Skips the named schema. Accepts wildcards and it's possible to specify multiple schemas with multiple -N switches. When both -n and -N are given, the behavior is to dump just the schemas that match at least one -n switch but no -N switches. If -N appears without -n, then schemas matching -N are excluded from what is otherwise a normal dump.

The -o option dumps the object id as part of the table for every table. This options should be used only if the OIDs are part of the design. Otherwise this option shouldn't be used.

The -O have effects only on plain text exports and skips the object ownership set.

The -s option dumps only the database schema.

The -S option is meaningful only for plain text exports. This is the super user which disables the triggers if the export is performed with the option -disable-triggers. Anyway, as suggested on the manual, it's better to run the restoring script as superuser.

The -t switch is used to dump the named table only. It's possible to specify multiple tables using the wildcards or specifying the -t more.

The -T does the opposite, skips the named table in the dump.

The switch -x skips dumping the privileges settings usually dumped as grant/revoke commands.

The option -binary-upgrade is used only for the in place upgrade program pg_upgrade. Is not for general usage.

The option -column-inserts result in the data exported as INSERT commands with all the column names specified. Because by default the data is saved as COPY statement, using this switch will results in a bigger dump file and a very slow restoration. It's sole advantage is any error in the restore will skip just the affected row and not the entire table's load.

The -disable-dollar-quoting disables the newer dollar quoting for the function's body and uses the standard SQL quoting.

The -disable-triggers emits the triggers disable and re enable for the data only export. Disabling the triggers ensure the foreign keys won't fail for the missing referenced data. This switch is meaningful only for the plain text export.

The -exclude-table-data=TABLE skips the data for the named table but dumps the table's definition. It follow the same rules of the -t and -T for specifying multiple tables.

The -insert dumps the data as INSERT command. Same as the -column-inserts the restore is very slow and should be used only for reloading data into non-PostgreSQL databases.

The -no-security-labels doesn't include the security labels into the dump file.

The -no-synchronized-snapshots allows the parallel export against pre 9.2 databases. Because the snapshot export feature is missing this means the database shall not change its status meanwhile the export is running. Otherwise there will be a not consistent data export. If in doubt do not use this option.

The -no-tablespaces skips the tablespace assignments.

The -no-unlogged-table-data does not export data for the unlogged relations.

The -quote-all-identifiers cause all the identifiers to be enclosed in double quotes. By default only the identifiers with keyword's name are quoted.

The -section option specifies one of the three export's sections. The pre-data, with the table, the view and the function definitions. The data section where the actual table data is dumped as COPY or INSERTS, accordingly with the command line options. The post-data section where the constraint, the index and the eventual GRANT REVOKE commands are finally executed. This switch is meaningful only for text exports.

The -serializable-deferrable uses a serializable transaction for the dump, to ensure the database state is consistent. The dump execution waits for a point in the transaction stream without anomalies to avoid the risk of the dump failing or causing other transactions to abort for the serialization_failure. The option is not beneficial for a dump intended only for disaster recovery. It's useful for the dump used for reloading the data into a read only database which needs to have a consistent state compatible with the origin's database.

The switch -use-set-session-authorization causes the usage of SET SESSION AUTHORIZATION commands instead of ALTER OWNER commands to set the objects ownership. The resulting dump is more standards compatible but the SET SESSION AUTHORIZATION command requires the super user privileges whereas ALTER OWNER doesn't.

Backup

The hardware is subject to faults. In particular if the storage is lost the entire data infrastructure becomes inaccessible, sometime for good. Also human errors, like not filtered delete or table drop can happen. Having a solid backup strategy is then the best protection, ensuring the data is still recoverable. In this chapter we'll take a look only to the logical backup with pg_dump.

pg_dump at glance

As seen in 3.1.5, pg_dump is the PostgreSQL's utility for saving consistent backups.

Its usage is quite simple and if launched without options it tries to connect to the local cluster

with the current user sending the dump to the standard output.

The pg_dump help gives useful informations about the usage.

postgres@tardis:~/dump$ pg_dump --help

pg_dump dumps a database as a text file or to other formats.

Usage:

pg_dump [OPTION]... [DBNAME]

General options:

-f, --file=FILENAME output file or directory name

-F, --format=c|d|t|p output file format (custom, directory, tar,

plain text (default))